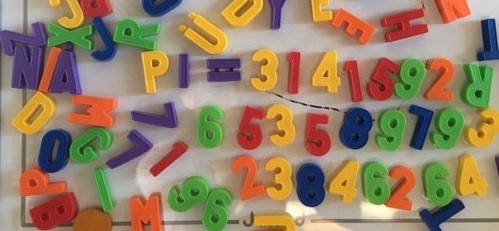

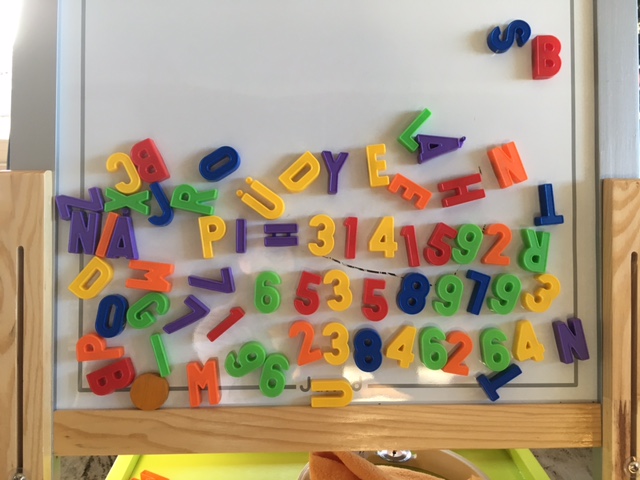

It’s pi day and time for another post. On Christmas 2016 my niece and my nephew got a small whiteboard with colorful magnetic letters as a present. After playing a while with my nephew, the board looked like this:

As you can see, I tried to write down the first digits of pi, while my fourteen months old nephew prettily, but otherwise randomly, arranged some magnets around my work. Since the digits of pi are also expected to be randomly distributed, this made me think about the difference of my own and my nephew’s contributions to the picture.

Shannon Information Entropy

In 1948 Claude Shannon introduced a quantity called information entropy, which can be used to quantify the information content of a message. Information entropy is maximised if the letters of an alphabet appear randomly in a message. If there exist correlations between letters, e.g. if it’s more likely that the letter t is followed by an h rather than by a q, then the entropy of a message is not at its maximum. Such messages (like the text of this post) can consequently be compressed into shorter strings by some decent compression algorithm.

If you compute the Shannon entropy of my own and my nephew’s contributions to the above picture, you should find that both messages are close to the theoretical maximum because in both cases the letters are statistically random samples of a given alphabet. Naively interpreting information entropy as information content would lead to the wrong conclusion that both messages are very useful pieces of information. While this is true for the digits of pi (you can use it for example to compute the volume of a sphere), it’s entirely wrong for the randomly arranged letters on the board. This example demonstrates that information must be more than a matter of statistics!

Beyond Statistics

My nephew is definitely able to exchange meaningful information with me or his parents, be it in the form of gestures or oral language. But what he is currently missing is the knowledge about the syntax and semantics of written language. It will take a few more years till he learns that the curly shape of a 3 is the Arabic representation of the number three. He will have to be taught that in our culture words are read from left to right, that a stands for the vowel “a” and that the word strawberry designates the red fruit he likes to eat. Only having been inaugurated to these secrets of English language, he will be able to share information with others in written form.

Syntax and Semantics in the DNA

Syntax and semantics are also found in the genome of plants, animals, and humans. A syntactical rule of the DNA is for example that words are always made up of three letters. Out of the four letters of the DNA (the nucleobases adenine, cytosine, guanine, and thymine) 64 different words can be formed. The semantics determines to which of the twenty amino acids a given three-letter word corresponds to. The word GCC translates for example to the amino acid Alanine.

The magnetic letters arranged by my nephew contain no information, since he is not yet aware of any syntactical and semantic rules. In the same way, any sequence of nucleobases created by natural processes (which are unaware of syntactical and semantic rules) must be void of information. Nonetheless, most biologists believe that the genome of any living being with all its information to build the most stunning molecular machines originates from impersonal and random events. Imho there are strong reasons to be skeptical about such kind of beliefs.

Comments by Pipi